Aug 21, 2025

Running marimo on the cloud with Coiled and uv

Coiled makes it easy to take anything you can run locally and run it in the cloud. That way, you have access to more machines, bigger machines, or machines that are close to your cloud data.

Many applications have an architecture where you run a local server, and then use the application in your web browser. Coiled supports this type of interactive use case with coiled run --interactive.

In this post, we’ll walk through how to use this to run marimo on a cloud VM.

For non-interactive work, or for work that you want to run in parallel across many cloud VMs, we recommend using coiled batch run.

Running in Docker on Your Local Computer

First, we’ll see one way to run marimo locally using Docker.

You could use a Docker image that already has marimo installed, but we’ll use a small uv container and uvx to install marimo on the fly. It’s a great way to get started quickly, and it gives you a lot of flexibility. Also, these uv progress bars are addictive.

We can run a marimo notebook server locally via Docker using a uv container like this:

docker run --rm -it -p 2718:2718 \
    astral/uv:debian-slim \
    uvx marimo new --port 2718 --headless --host 0.0.0.0

This command will print a URL to access your marimo notebook in a web browser. Any computations we execute in that notebook will run on our local computer inside the Docker container that’s running marimo.

When we run marimo, we use --port 2718 to tell it which port to use for the web server; this needs to match the port we expose on the Docker container. We use --headless to tell marimo not to try automatically opening a browser window (which won’t work from inside Docker). Finally, we use --host 0.0.0.0 so that marimo doesn’t need to know the IP address that it’s serving on (which will be different inside and outside Docker).
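Because the same port number appears three times in that command, one way to keep the values in sync is to put it in a shell variable. The sketch below only assembles and prints the command rather than running it. `PORT` and `docker_cmd` are names invented for this illustration; the image name and marimo flags are copied from the command above.

```shell
# PORT and docker_cmd are hypothetical names used only in this sketch.
PORT=2718

# -p HOST:CONTAINER publishes the container port on the host;
# marimo's --port must match the container side of that mapping.
docker_cmd="docker run --rm -it -p ${PORT}:${PORT} astral/uv:debian-slim uvx marimo new --port ${PORT} --headless --host 0.0.0.0"

# Print the command for inspection instead of executing it.
echo "$docker_cmd"
```

Changing `PORT` in one place then updates both the Docker port mapping and the marimo flag.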

Running in the Cloud

Now that we know how to run marimo locally in Docker, it’s easy to run the same code (or anything else) in the cloud using Coiled.

Why run marimo in the cloud? When you’re running on a cloud VM, any compute you execute in the notebook will run on that cloud VM, not your local machine. The cloud gives you easy access to powerful hardware—big CPUs, lots of memory, or GPUs—and makes it easy to run computations close to data stored in the cloud, which can significantly reduce time and cost for moving data between the cloud and your local machine.

In fact, marimo recently launched molab, a free, hosted notebook workspace that’s fantastic for quickly trying things out without any setup. If you need more flexibility—running on your own cloud, choosing any instance type, or using GPUs—Coiled can provide it.

As before, we’ll use the astral/uv:debian-slim container with uvx to install marimo on the fly.

Instead of docker run, we’ll use coiled run, with just a few small tweaks to the arguments. Here’s the full command:

coiled run -it --port 2718 \
    --container astral/uv:debian-slim \
    -- uvx marimo new --port 2718 --headless --host 0.0.0.0 \
    --proxy \$COILED_CLUSTER_HOSTNAME:2718

There’s no need for the --rm flag that we used with Docker to clean up the container on exit. On Coiled, when you exit marimo (either in the browser or by hitting Control-C in your terminal), Coiled will automatically shut down the VM and clean up.

For the port, we specify a single one with --port 2718. Docker needs to know which internal port to map to which external port, but Coiled only needs to know which port to open in the VM’s firewall.

We explicitly specify a container with the --container option because, unlike Docker, coiled run supports other ways to ship your software environment to the cloud.

For the marimo command, we add --proxy \$COILED_CLUSTER_HOSTNAME:2718 so that marimo prints the correct URL for our cloud VM.
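The backslash in front of `$COILED_CLUSTER_HOSTNAME` matters: without it, your local shell would expand the variable before Coiled ever sees the command. The sketch below shows the difference using plain shell. The local value assigned here is made up for the demonstration, and the sketch assumes (as the command above suggests) that the variable is meant to be expanded on the cloud VM rather than on your machine.

```shell
# Made-up local value, only to show what an unescaped $ would pick up.
COILED_CLUSTER_HOSTNAME="my-laptop.local"

# Escaped: the local shell passes the text through with a literal $.
escaped="--proxy \$COILED_CLUSTER_HOSTNAME:2718"

# Unescaped: the local shell substitutes its own value right away.
unescaped="--proxy $COILED_CLUSTER_HOSTNAME:2718"

echo "$escaped"
echo "$unescaped"
```

The first line printed still contains the variable name, while the second contains the local value. If the variable were unset locally, the unescaped form would collapse to `--proxy :2718`.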

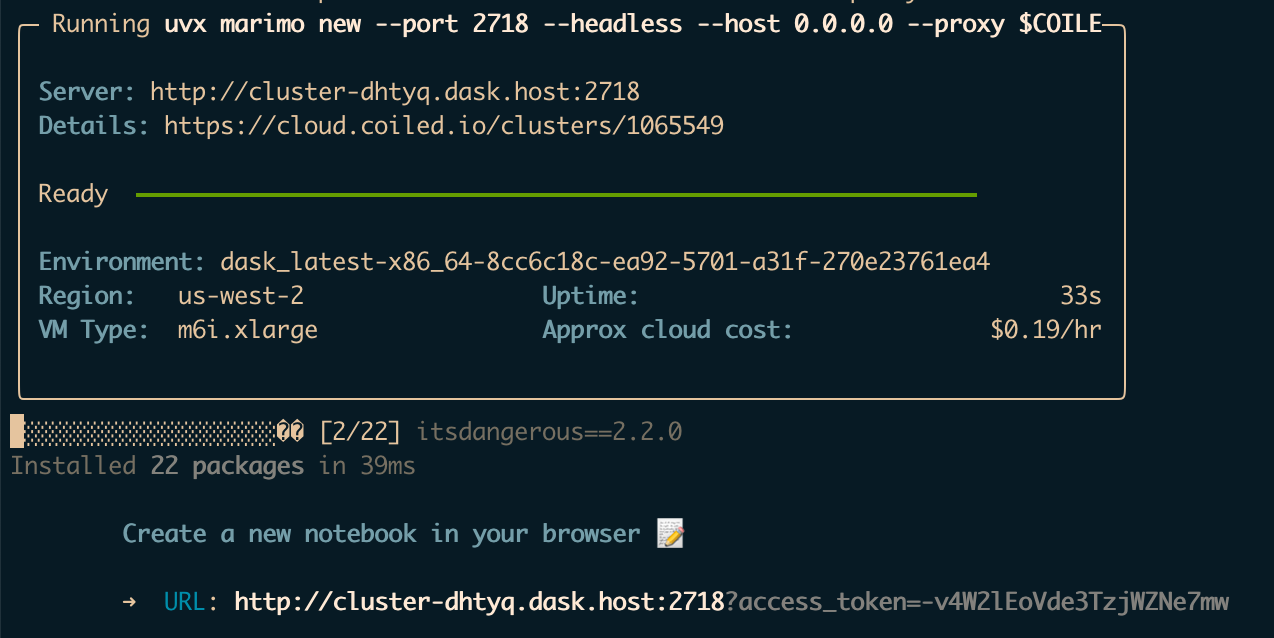

That’s it! Once Coiled prepares your cloud VM, you’ll get a link to use Marimo in your web browser:

Launching marimo with Coiled from a terminal.#

To shut things down, you can either use the shutdown button in the notebook or hit Control-C in your terminal. Once the notebook exits, Coiled will automatically shut down the VM and clean up everything in the cloud.

Sync Files

To make the experience even smoother, we can use the --sync flag to sync our local files with the VM where marimo is running. Coiled will sync files in the current directory with the directory where marimo is running on the VM. That way, the files you edit on the VM will still be available locally when you shut it down. Here’s how we’d sync our local files:

coiled run -it --port 2718 --sync \
    --container astral/uv:debian-slim \
    -- uvx marimo new --port 2718 --headless --host 0.0.0.0 \
    --proxy \$COILED_CLUSTER_HOSTNAME:2718
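Since --sync works on the current directory, it can be worth a quick look at what that directory contains before launching. The following is a rough pre-flight sketch using standard shell tools, not a Coiled feature. It assumes everything under the current directory is a candidate for syncing, and `file_count` and `size_kb` are names chosen for this example.

```shell
# Rough pre-flight check before using --sync (a sketch, not part of Coiled).
# Count regular files under the current directory.
file_count=$(find . -type f | wc -l | tr -d ' ')

# Total size of the current directory in kilobytes.
size_kb=$(du -sk . | cut -f1)

echo "About to sync ${file_count} files (${size_kb} KB) from $(pwd)"
```

If the numbers are larger than expected, running the command from a smaller project directory is one option.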

Install Packages Automatically

We could use Coiled’s support for automatic package synchronization to install our local Python packages onto the cloud VM—it’s very convenient if you have a local-only package or an editable install[1]—but it’s not necessary here. Marimo automatically detects the packages required and offers to install them. Because uv is so fast, it’s fine to install them on the fly. (If you’re looking for those uv progress bars, they’ll be in your terminal, not in the marimo UI.)

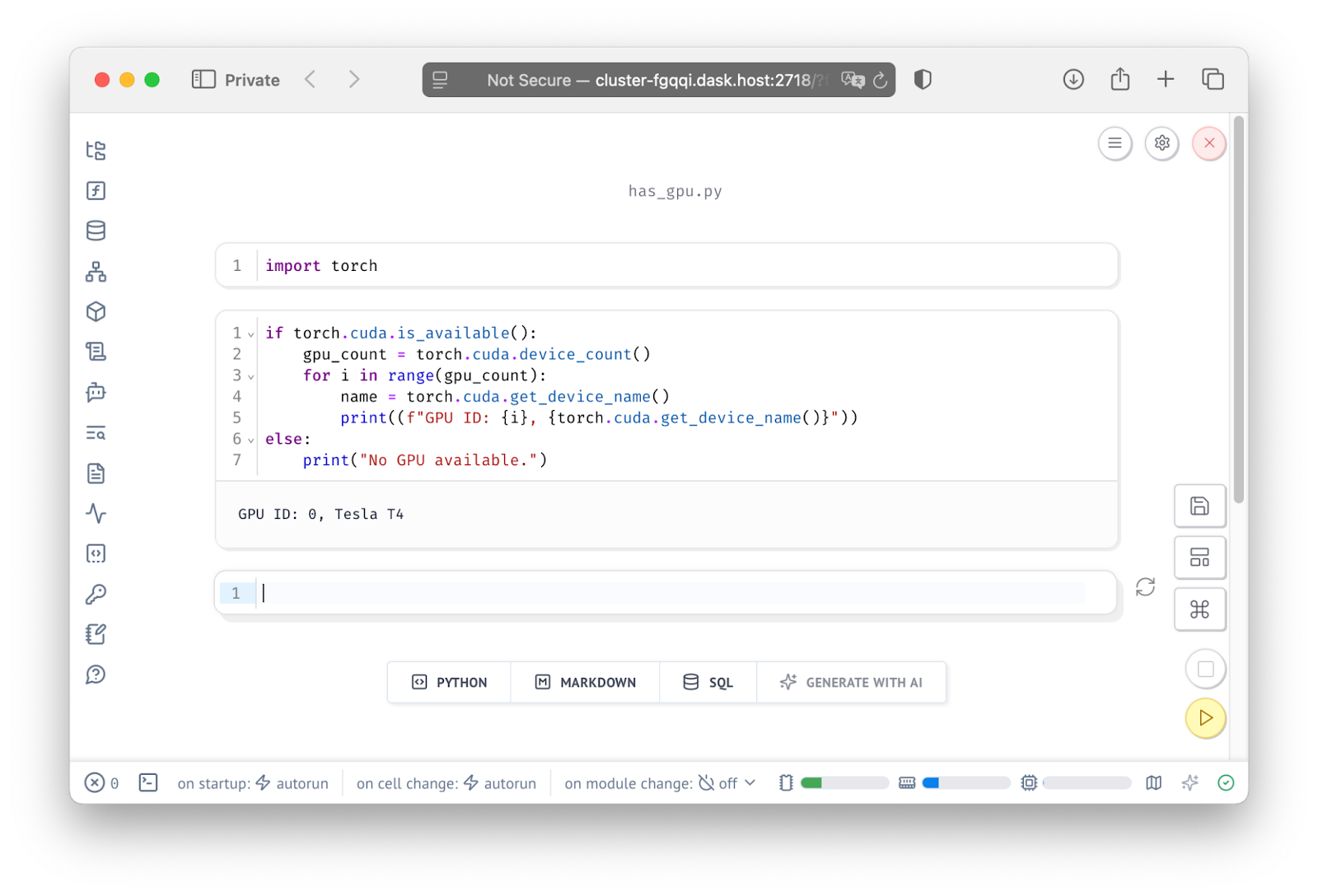

Run marimo on a VM With a GPU

Coiled lets you pick any VM type your cloud provider offers, which means it’s easy to run marimo on a VM with a GPU. You can specify a VM type by name or pass --gpu to get a reasonable default machine with a GPU (currently g4dn.xlarge on AWS). Coiled (and uv in this case) will install the appropriate drivers and libraries to match your hardware.

coiled run -it --port 2718 --sync --gpu \
    --container astral/uv:debian-slim \
    -- uvx marimo new --port 2718 --headless --host 0.0.0.0 \
    --proxy \$COILED_CLUSTER_HOSTNAME:2718

Launching with the --gpu option gives you a VM with a CUDA-compatible GPU.
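The three coiled run commands in this post differ only in the extra flags (--sync, --gpu), so they can be generated from one small helper. This is a minimal sketch: `marimo_coiled_cmd` is a hypothetical function name, and it prints the command for review rather than executing it. The flags it emits are exactly those used in the commands above.

```shell
# Hypothetical helper: prints (does not run) the coiled command from this post.
# Usage: marimo_coiled_cmd PORT [extra coiled flags, e.g. --sync --gpu]
marimo_coiled_cmd() {
    port="$1"
    shift
    printf 'coiled run -it --port %s' "$port"
    # Remaining arguments are passed through as extra coiled run flags.
    for flag in "$@"; do
        printf ' %s' "$flag"
    done
    printf ' --container astral/uv:debian-slim'
    printf ' -- uvx marimo new --port %s --headless --host 0.0.0.0' "$port"
    # Emit a literal backslash-dollar so the printed command can be pasted
    # into a shell without the variable being expanded locally.
    printf ' --proxy \\$COILED_CLUSTER_HOSTNAME:%s\n' "$port"
}

marimo_coiled_cmd 2718 --sync --gpu
```

Calling it with only a port prints the basic command, and adding --sync or --gpu reproduces the later variants on a single line.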

Wrapping Up

We used coiled run as a replacement for docker run to launch marimo in the cloud using a uv container. Coiled’s capabilities make the cloud feel like an extension of our local development environment. Combining coiled run with uv is a powerful pattern for running any Python application in the cloud, such as OpenLLM.