Oct 6, 2023

2m read

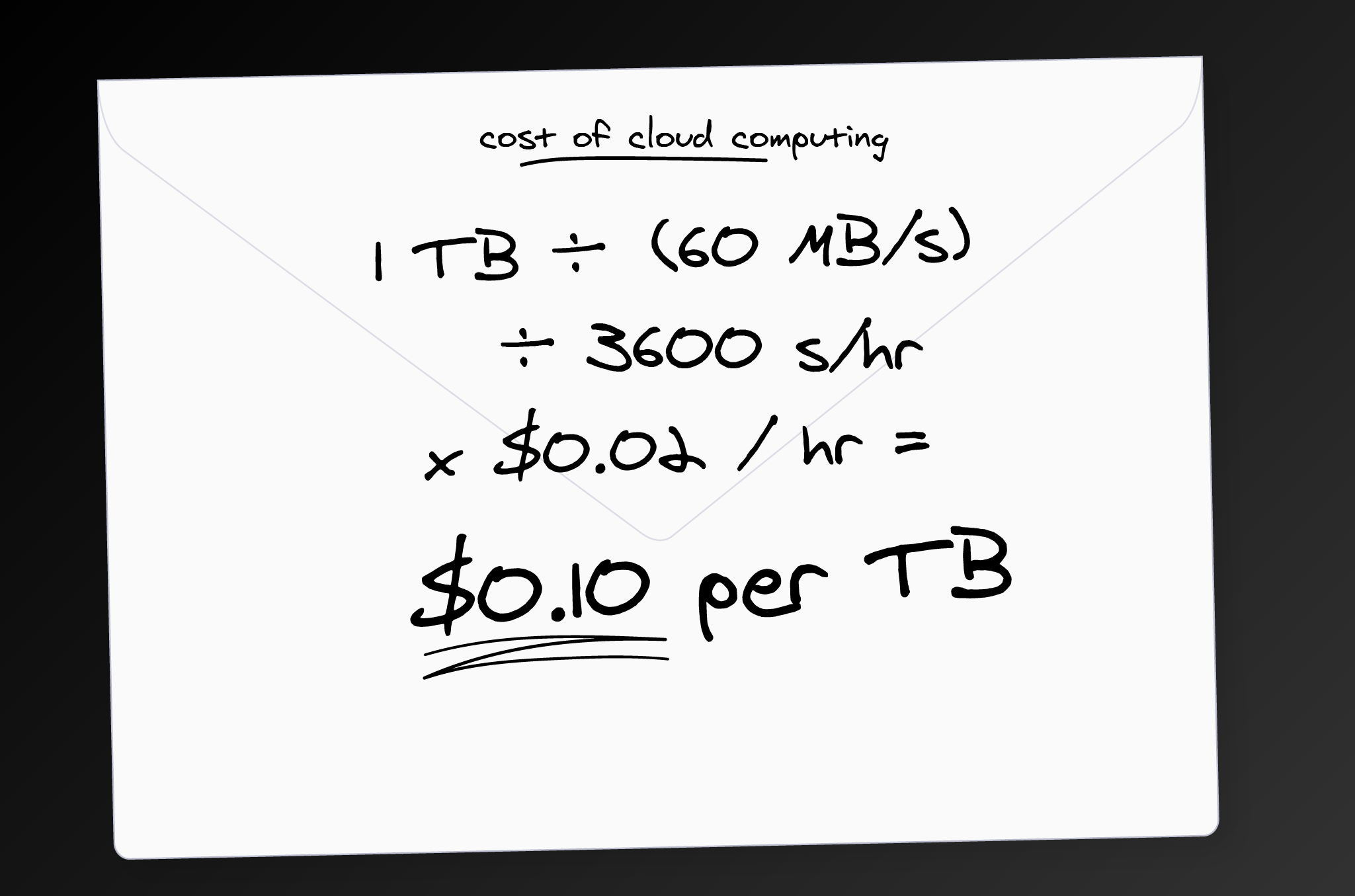

Ten Cents Per Terabyte#

The optimal cost of cloud computing

I often get asked the question:

Q: What should the following workflow cost in the cloud?

If the computation is some sophisticated ML workflow then the answer is who knows 🤷? We’ll have to run it and see. But most workloads are simple and so, when tuned, are primarily bound by S3 bandwidth. In this case the answer is pretty easy.

A: If you do everything right, the cost is ten cents per terabyte.

Let’s do a back-of-the-envelope calculation:

~60 MB/s S3 bandwidth for a single core VM

$0.05 per hour$0.04 per hour if you use ARM$0.02 per hour if you use ARM + Spot (

m7g.medium)

So 1 TB / (60 MB/s) / 3600 s/hr * $0.02 / hr = $0.10

This assumes linear scaling, which for these workloads is usually a good assumption.

But only if you do everything right#

We recently wrote about scaling an Xarray computation out to 250 TB. After optimization it cost $25, so right on target.

However, before optimization that workload didn’t run at all. After some work it ran, but was slow and expensive. It was only after more tuning that the workload ran at the magic ten-cents-per-terabyte number. Tuning is hard.

We see this often. People come with wildly unoptimized cloud workflows running on EC2 or AWS Lambda or Databricks or something, asking if we can make them cheaper. We’re often able to get these computations down to a few cents (most problems are small).

Common problems include:

Downloading data locally rather than operating near the data (Egress costs can be $0.10 per GB rather than TB)

Really inefficient pure-Python code

Many small reads to S3, especially with non-cloud-optimized file formats or bad partitioning

Provisioning big machines for small tasks

Provisioning small machines for big tasks and swapping to disk

Idling resources (the harder a system is to turn on, the more people avoid turning it off)

Not using ARM (recompiling software is hard)

Not using Spot (failing machines are annoying)

But of course, the biggest cost here is human time. People spend days (weeks?) wrangling unfamiliar cloud services (EC2, Batch, Lambda, SageMaker) unsuccessfully, at the personnel cost of thousands of dollars (people are expensive).

Optimize for Ease, Visibility, and Flexibility#

I like compute platforms that optimize for …

Ease of use and rapid iteration

Visibility of performance metrics

Flexibility of hardware

This combination enables fast iteration cycles that enable humans to explore the full space of solutions (code + hardware) effectively.

Putting on my sales hat, that’s roughly what we’ve built at Coiled for Python code at scale. It’s been a joy to use.